Tokenizing

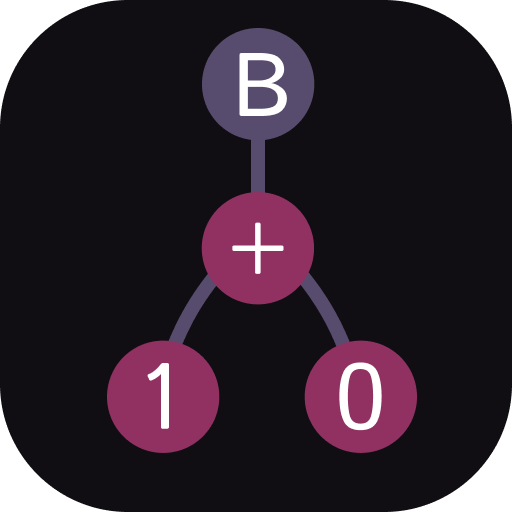

Tokenization is the process of breaking an input string (usually source code or text) into meaningful units called tokens. A token is the smallest sequence of characters that carries meaning in the context of a language, like keywords, operators, identifiers, or literals. Tokenization is often the first step in a compiler or interpreter’s workflow, preceding parsing.

Tokenization is also called lexical analysis: the input string is scanned from left to right, and sequences of characters are grouped into tokens. This step is performed by a lexer (or scanner), the component responsible for converting the raw input into a stream of tokens.

For example, in the C language, the statement `int x = 42;` can be tokenized into the following tokens: `int`, `x`, `=`, `42`, `;`.

Tokenization Pseudo Code
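The left-to-right scan described above can be sketched as a small regex-based lexer. This is a minimal illustration in Python, not the dotlr implementation: the `Token` type, the `TOKEN_RULES` table, and the `tokenize` function are hypothetical names chosen for this example, and the rules cover only a tiny C-like subset.

```python
import re
from typing import Iterator, NamedTuple


class Token(NamedTuple):
    kind: str  # category of the token, e.g. "KEYWORD"
    text: str  # the exact characters matched in the input


# Hypothetical rules for a tiny C-like subset. Order matters: keywords are
# listed before identifiers so that "int" is not classified as an identifier.
TOKEN_RULES = [
    ("KEYWORD", r"\b(?:int|return)\b"),
    ("IDENTIFIER", r"[A-Za-z_]\w*"),
    ("NUMBER", r"\d+"),
    ("OPERATOR", r"[=+\-*/]"),
    ("SEMICOLON", r";"),
    ("WHITESPACE", r"\s+"),
]

# Combine all rules into one pattern with a named group per token kind.
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_RULES))


def tokenize(source: str) -> Iterator[Token]:
    """Scan `source` from left to right and yield one Token per match."""
    position = 0
    while position < len(source):
        match = MASTER.match(source, position)
        if match is None:
            raise ValueError(
                f"unexpected character {source[position]!r} at offset {position}"
            )
        position = match.end()
        # Whitespace separates tokens but is not itself emitted.
        if match.lastgroup != "WHITESPACE":
            yield Token(match.lastgroup, match.group())
```

Calling `[t.text for t in tokenize("int x = 42;")]` on the statement from the example above gives `['int', 'x', '=', '42', ';']`. Trying the rules in a fixed order at each position is one simple design; real lexers often also apply a longest-match rule, which this sketch does not.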

For more information, see the dotlr library docs.